Hello

You are reading Understanding TikTok. My name is Marcus. I was in the middle of listening to an already autumn-suited Charli XCX/Fleetwood Mac mashup (Dreams) when P.E. Moskowitz’s text Vibeocracy hit me hard with these few succinct words: “And then, of course, it turned out that vibes did not mean much.”

Today we talk all about 🥷 terror 🏴 on the platform.

TikTok alone will not turn you into a terrorist

The last couple of days in Germany have been all about a fatal knife attack in the western German city of Solingen (the Islamic State group has claimed responsibility). The attack has placed immigration and Islamist terror at the top of the political agenda (FT) days ahead of key elections (Deutsche Welle). It took traditional media only a couple of hours to headline: TikTok terrorists and Islamism influencers: Almost all Islamist attackers have radicalized themselves online (NZZ). Where else, one might add.

TikTok terrorists (NZZ). A new compound term, popularized by political scientist and “terror expert” Peter R. Neumann (King’s College London). As a former journalist, I understand the appeal of alliteration, yet I find this one especially imprecise.

It is indisputable that terror organizations and extremists try to win people over on any platform they can. But the public discussion around TikTok, especially in the context of terror, seems to me rather uninformed, under-researched, and pretty overheated.

Here is an example. In an interview with NZZ, Neumann says: “if you click three times on a video that has to do with Palestine, for example, then you will almost only see corresponding content”. But current research by Anna Semenova, Martin Degeling, and Greta Hess shows that “TikTok’s recommender system introduces content from diverse interests periodically to keep users engaged and help them discover new topics” (Auditing TikTok).

And of course, not everyone, and not every criminal, is a terrorist. Neumann himself points out in an interview: “by definition, a paranoid-schizophrenic violent criminal cannot be a terrorist”. Nor can TikTok alone be held responsible for so-called “speed radicalisation” (Spiegel), whatever that might even mean. Radicalisation is a complex and lengthy process that cannot be traced back to a single app. Or, as CNN reports: in light of an alleged terror plot against a Taylor Swift concert in Vienna, there is renewed concern about online extremism among youth. The 19-year-old alleged mastermind of the attack was radicalized online, Austrian authorities say, although it is unclear how. It is unclear. How.

Let’s have a more informed debate

Well. TikTok, right?! For a better-informed discussion, here are some first observations on the whom (audience), the who (bad actors) and the how (strategies) of Islamist extremist content (Verfassungsschutz) on TikTok.

01) Audience: Terror suspects aged between 13 and 19

Terror organizations and extremists have diverse target audiences, which they try to reach on a variety of suitable platforms (e.g. Islamic State Content on Pinterest). Much of the attention has focused on how successfully they target and recruit teenagers. In an academic study of 27 ISIS-linked attacks or disrupted plots, Neumann found that of the 58 suspects, 38 were aged between 13 and 19 (CNN). To reach this specific audience, it of course makes sense to use the tools at hand. Close to 100% of young adults in Germany use social media, and 91.5% of them use it intensively, meaning they open at least one platform multiple times a day (How young adults use TikTok). These numbers are most likely very similar in many other countries.

👉What to do:

More education for, and communication among, pupils, students, teachers, parents and decision makers alike. Encourage critical media consumption: How can you spot a propaganda clip? Where does freedom of speech end and hate speech begin? How do you cross-check information on platforms?

02) Bad Actors: From rock star preachers to execution footage

A variety of bad actors in different parts of the world use TikTok and other platforms, each with different goals.

The first reports of the Islamic State being active on TikTok date back to 2019, when the WSJ reported: Islamic State Turns to Teen-Friendly TikTok, Adorning Posts With Pink Hearts. In 2021, research from the Institute for Strategic Dialogue found 11 Islamic State videos, including execution footage, being shared openly on TikTok (Hatescape: An In-Depth Analysis of Extremism and Hate Speech on TikTok). A 2023 investigation, CaliphateTok: TikTok continues to host Islamic State propaganda, uncovered an active Islamic State support network of 20 accounts with more than a million collective views.

There is a wide range of actors and approaches to be identified. They range from pro-Islamic State alternative news outlets in Iraq that run multi-platform, multilingual disinformation operations under the guise of “media” (ISD) to so-called ‘rock star’ preachers influencing young people online (CNN), such as Abul Baraa, a German-speaking Salafi preacher described as “a gateway drug for more radical actors”, Ibrahim El Azzazi (Welt) or Raheem Boateng (Merkur).

👉 What to do:

More research is needed here to identify actors and strategies and to untangle networks. Security authorities need a better understanding of platform specifics to track and monitor content more effectively.

03) Strategies and Techniques: Coded Emojis, Sound Memes, and AI News

“Jihadi terrorists have historically adapted and leveraged new and emerging technologies for recruitment and operational ends” (The Soufan Center). Bad actors embrace every variation of contemporary communication. They apply the full spectrum of postdigital propaganda (#130).

This includes different tactics for different platforms: on Facebook, replacing terrorist language with emojis; on Twitter, toning down the content in English compared with what is posted in Arabic; on Telegram, copying directly from official ISIS material. It is an evolving cat-and-mouse battle with tech companies and national security agencies (Politico). Coded emojis as dog whistles are common among right-wing extremists, but Islamist terrorists use them too. Just watch out for the ninja and black flag emojis. Pretty obvious. I know.

Islamic State supporters exploit TikTok's 'sounds' feature (Sky News): a TikTok user appears in a photograph holding two children. In another, he is in a gym, training with a worn-out punching bag. Visually, the TikTok post appears innocuous, but the audio is not. It consists of a speech given in Arabic, laid over an excerpt of vocal music known as a nasheed. This one was composed in support of Islamic State. Much more graphic content is just a tap or click away. Sound propaganda is effective and not well known among fact-checkers. I will get back to that in the next newsletter.

And, of course, there are further variations, including AI. In 2024, ISIS supporters launched an AI-generated program called News Harvest to disseminate propaganda videos (The Soufan Center). The Global Network on Extremism & Technology reports on AI-Powered Jihadist News Broadcasts: A New Trend In Pro-IS Propaganda Production?

👉 What to do:

More research is needed here on strategies and campaigns: their target audiences, reach and success. Knowledge transfer is also needed to better inform fact-checkers, security authorities and politicians.

Islam ≠ Terrorism. I repeat. Islam ≠ Terrorism

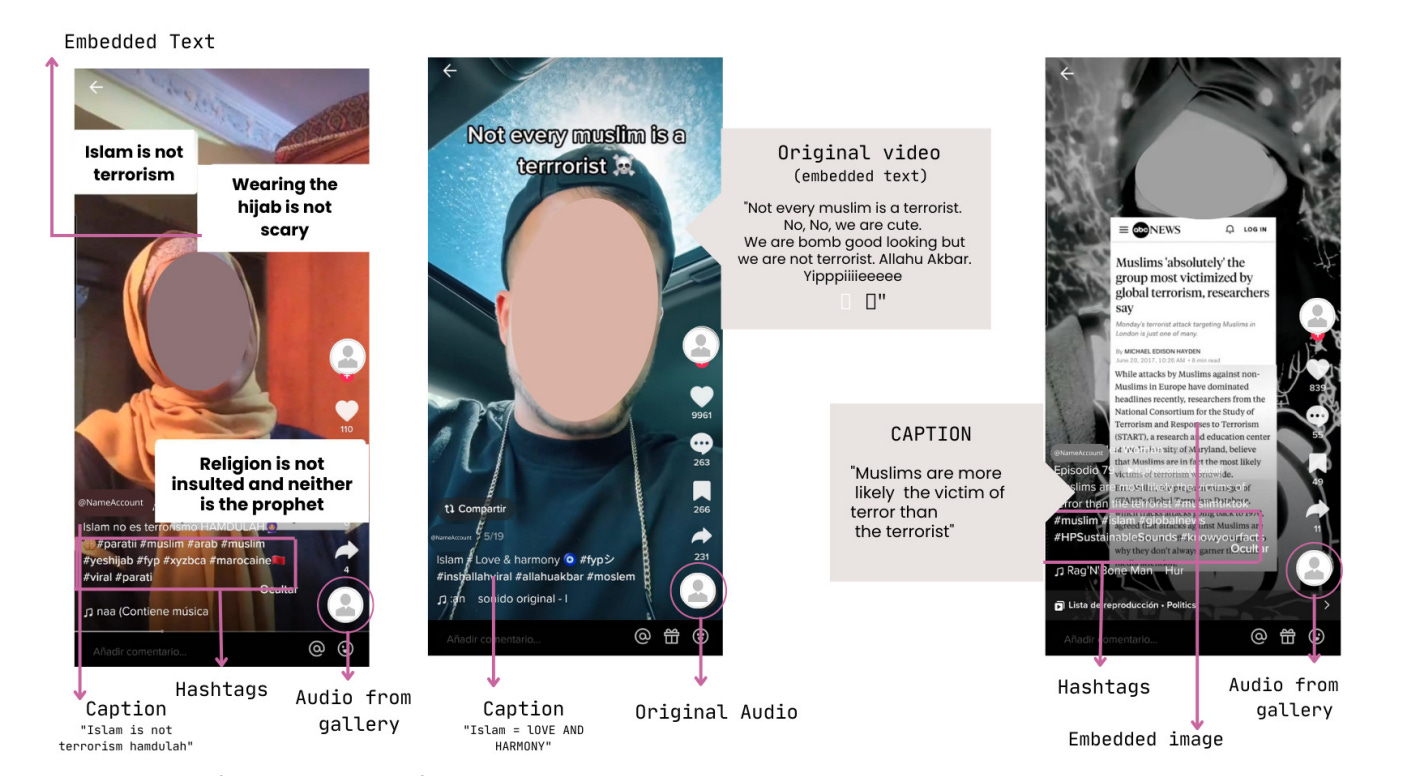

These are some preliminary observations. I hope they help foster a better-informed, rational debate, and perhaps kick off some research projects. After all, TikTok is used both to spread Islamist extremist and terrorist propaganda and to lead a critical discourse that challenges the misconception “Islam = terrorism” (see Social Media & Otherness).

What else?

Why Creators Have Stopped Editing Their Content (Rolling Stone)

Unpaid political content is thriving on the platform – and it is meme-infused (NYT)

Inside the Gen-Z operation powering Harris’ online remix (CNN)

We don’t have time for stories anymore. A vibe is enough (via Jules Terpak)

An influencer is running for Senate. Is she just the first of many? (Vox)

TikTok Creators and the Use of the Platformed Body in Times of War (SM&S)

Thanks for reading. Speak soon. Ciao